Inside the NYPD’s Surveillance Machine

Your face is being tracked. Find out where.

The New York City Police Department’s (NYPD) surveillance machinery disproportionately threatens the rights of non-white New Yorkers.

The expansive reach of facial recognition leaves entire neighbourhoods and protest sites across the city exposed to surveillance, supercharging existing racial discrimination.

This cannot continue. We must ban the scan.

The NYPD used facial recognition technology in 22,000 cases between 2016 and 2019 – half of which were in 2019. Despite mounting evidence that facial recognition technology violates human rights, in most cases we do not know where, when or why it was used. When Amnesty International and others filed a Freedom of Information Law request, the NYPD refused to provide information.

To help bring about a ban on police use of facial recognition technology, in mid-2021 Amnesty International launched an ambitious effort, Decode Surveillance NYC. The effort mobilised thousands of digital volunteers to find and categorise CCTV cameras throughout the city. We then worked with data scientists, geographers, and 3D modellers to analyse the crowdsourced data.

Our analysis of the Decode Surveillance NYC data reveals an expansive, invasive and discriminatory surveillance machine at the core of the NYPD’s policing tactics.

- Digital volunteers identified more than 25,500 public and private cameras across traffic intersections in New York City.

- Analysis of the data shows that New Yorkers living in areas at greater risk of racist stop-and-frisk policing are likely to be more exposed to invasive facial recognition technology.

- In the Bronx, Brooklyn and Queens, the research also showed that the higher the proportion of non-white residents, the higher the concentration of facial recognition compatible CCTV cameras.

- When New Yorkers marched for racial justice during the Black Lives Matter protests of mid-2020, they risked high levels of exposure to facial recognition. A protester walking a sample route from W4 St/Wash Sq Subway station to and from Washington Square Park is, for example, surveilled for approximately 100% of their journey by pan, tilt and zoom cameras operated by the NYPD.

Facial recognition for identification is mass surveillance and is incompatible with the rights to privacy, equality, and freedom of assembly. In order to ban police use of this dangerous and discriminatory technology and protect our communities, we need you and your community to write to your New York council member, demanding the introduction of a bill that prohibits police use of facial recognition.

If you do not live in New York City, you can sign our global petition calling for a ban on the use of facial recognition for mass surveillance by state agencies and private sector actors across the globe.

Banning facial recognition is a first step toward dismantling racist policing

A digital stop-and-frisk

NYPD surveillance camera over Queens Boulevard. © Getty Images

Government agencies’ use of facial recognition disproportionately impacts Black and Brown people because they are at greater risk of being misidentified and so falsely arrested. Even when accurate, facial recognition is harmful: law enforcement agencies with problematic records on racial discrimination are able to use facial recognition software to double down on existing targeted practices. In March 2021, for instance, New York’s Police Commissioner apologised for the NYPD’s legacy of racialised policing.

In our analysis of stop-and-frisk locations in relation to the camera data identified in this project, we found that areas across all boroughs with a higher incidence of stop-and-frisk are also the areas with the greatest current exposure to facial recognition. This comes despite the well-documented discriminatory and highly inaccurate nature of both stop-and-frisk practices and facial recognition. It also comes at a moment when it is increasingly clear that there is no evidence to suggest that facial recognition reduces crime, while there is extensive research documenting its harms.

This correlation points to the existence of a persistent form of ‘digital frisking’ that exceeds the boundaries of physical stop-and-frisk. Digital frisking means that certain neighbourhoods are at greater risk of being exposed to facial recognition than others. It means that the effects of policing tactics, such as stop-and-frisk, endure beyond the singular stop-and-frisk incident itself, as communities are constantly intercepted by the NYPD’s surveillance machine.

In the Bronx, Brooklyn and Queens, the analysis also showed that the higher the proportion of non-white residents, the higher the concentration of facial recognition compatible CCTV cameras.
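One way to quantify a relationship of this kind is a correlation coefficient computed over per-area values. The sketch below is illustrative only: the article does not say which statistic the analysts used, so the choice of Pearson's r is an assumption, and the function name `pearson` is hypothetical. It contains no project data.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers.

    Assumption: each position in xs and ys describes the same area
    (for example, stop-and-frisk incidents and camera counts).
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 values")

    mean_x = sum(xs) / n
    mean_y = sum(ys) / n

    # Sums of products of deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)

    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")

    return cov / math.sqrt(var_x * var_y)
```

Called with one value per area in each list, the function returns a number between -1 and 1; values near 1 would indicate that areas with more stop-and-frisk incidents also tend to have more cameras. A correlation alone does not establish why the two go together.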

Our analysis follows research published by the New York Civil Liberties Union in 2019, analysing “stop-and-frisk” incidents across New York City. They found that at the height of the practice in 2011, approximately nine out of 10 frisked individuals were innocent, and that in that year the majority of those frisked (53%) were Black. Their data for 2019 showed that in the majority of frisk incidents (66%) the person stopped was ultimately innocent. Moreover, 59% of these incidents involved Black individuals. The latest data for 2020 continues to show the same trend.

Banning facial recognition protects the right to protest

Black Lives Matter protests

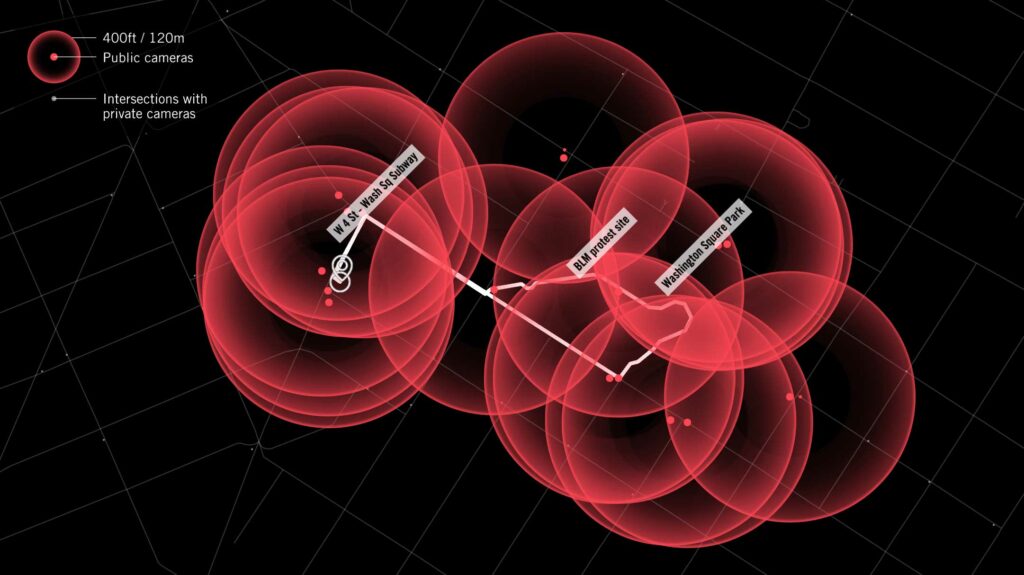

Public cameras on a possible route walked by people attending a BLM protest in Washington Square Park, Manhattan, on 10 June 2020. Graphic: Amnesty International

During the Black Lives Matter (BLM) movement of mid-2020, New Yorkers attending protests experienced higher levels of exposure to facial recognition. For example, a protester walking from the nearest subway station to Washington Square Park could be under surveillance by NYPD Argus cameras for the entirety of their route.

The NYPD uses a surveillance system developed by Microsoft, known as the Domain Awareness System, which gives police officers access to an estimated 20,000 feeds from public and private cameras. These feeds, when combined with other cameras and facial recognition software, can be used to track the face of any individual in New York City.

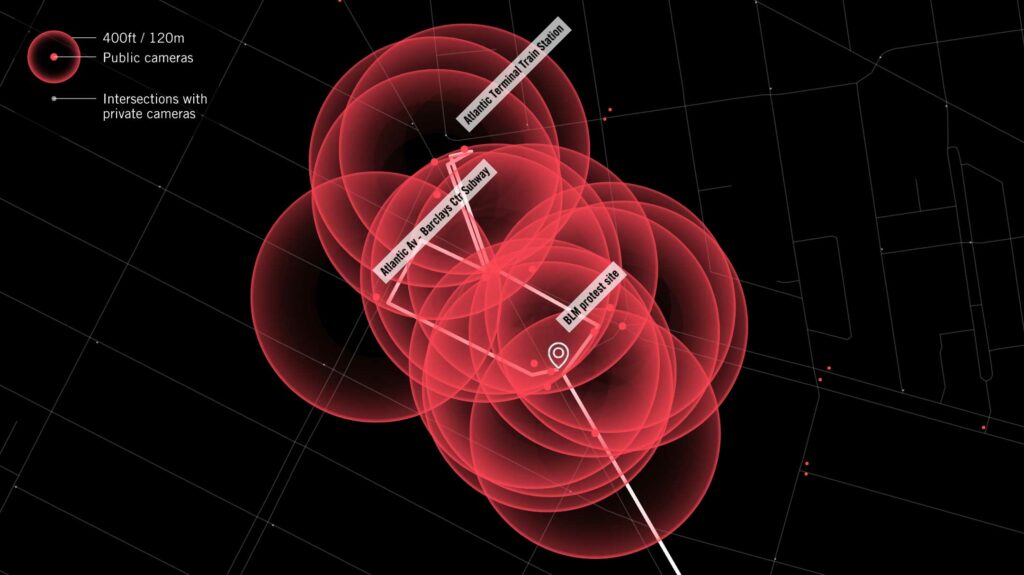

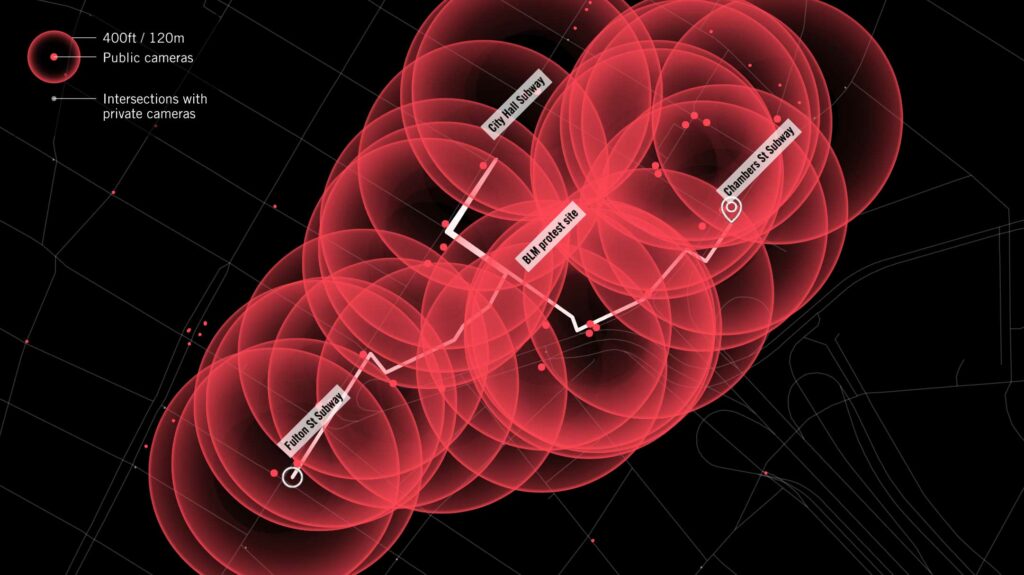

To establish the extent of this, we plotted our camera data against BLM protest sites from mid-2020, including Times Square and the Theatre District, New York City Hall and Washington Square Park in Manhattan, and Barclays Center in Brooklyn.

Public cameras on a route likely walked by people attending BLM protests near Atlantic Terminal, Brooklyn, in June 2020. Graphic: Amnesty International

For this case study, we focused on the public NYPD-operated Argus dome cameras identified in the Decode Surveillance NYC project, excluding private cameras. Since these cameras are most likely varifocal, with a range of 134–148 mm, and assuming a 4K resolution (equivalent to 22x optical zoom), each can capture detail such as faces at distances of up to approximately 120 metres (roughly the length of one block). This helped us understand the radius of exposure for each camera found at protest sites.
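A radius of exposure like this can be turned into a route-coverage estimate by sampling points along a walking route and checking whether each falls within 120 metres of at least one camera. The sketch below is a simplified illustration, not the method used in the project: the function names are hypothetical, coordinates are assumed to be in metres on a flat local grid, and buildings, camera orientation and line of sight are ignored.

```python
import math

# From the text: faces can be captured up to roughly 120 metres away.
EXPOSURE_RADIUS_M = 120.0


def distance(a, b):
    """Straight-line distance between two (x, y) points in metres."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def sample_route(route, step_m=1.0):
    """Return points spaced at most step_m apart along a polyline route."""
    points = [route[0]]
    for start, end in zip(route, route[1:]):
        segments = max(1, math.ceil(distance(start, end) / step_m))
        for i in range(1, segments + 1):
            t = i / segments
            points.append((
                start[0] + t * (end[0] - start[0]),
                start[1] + t * (end[1] - start[1]),
            ))
    return points


def route_exposure(route, cameras, radius_m=EXPOSURE_RADIUS_M, step_m=1.0):
    """Fraction of sampled route points within radius_m of any camera."""
    points = sample_route(route, step_m)
    covered = sum(
        1 for p in points
        if any(distance(p, cam) <= radius_m for cam in cameras)
    )
    return covered / len(points)
```

For example, `route_exposure([(0, 0), (400, 0)], [(100, 0), (300, 0)])` describes a straight 400-metre walk with two cameras placed along it, whose 120-metre circles together cover the whole route. Because obstructions are ignored, an estimate of this kind is an upper bound on what a camera could actually see.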

Technologies of mass surveillance risk dissuading protest as people fear being identified, tracked, harassed and persecuted simply for exercising their right to freedom of assembly. In other words, pervasive facial recognition has a chilling effect on citizens’ ability to exercise their most basic rights. The deployment of these technologies at Black Lives Matter protests, against the backdrop of the intensified fight for racial justice, is a stark warning of how facial recognition continues to affect Black and Brown communities in particular. The case of Black Lives Matter activist Derrick ‘Dwreck’ Ingram, whose apartment was besieged by NYPD officers after he was targeted with facial recognition technology at a protest, is just one example of the harassment Black protesters risk facing through the use of facial recognition.

Public cameras on a route likely walked by people attending BLM protests outside New York City Hall, Manhattan, in June 2020. Graphic: Amnesty International

At New York City Hall, where protesters occupied the front lawn of the building from 23–30 June 2020, people arriving and leaving the protest were potentially exposed to facial recognition for 100% of their route.

Likewise, at Barclays Center in Brooklyn, where a high concentration of BLM protests also took place, approximately 95% of the route sampled by Amnesty International was surveilled by facial recognition compatible CCTV cameras. Meanwhile, a protester marching in Times Square would have risked surveillance via facial recognition for 100% of their route.